From Black Box to Glass Box: Justice Maheshwari Calls for Transparent AI in Courts

Navarasa and the Constitution: Justice Maheshwari on Why Sentencing Cannot Be Automated

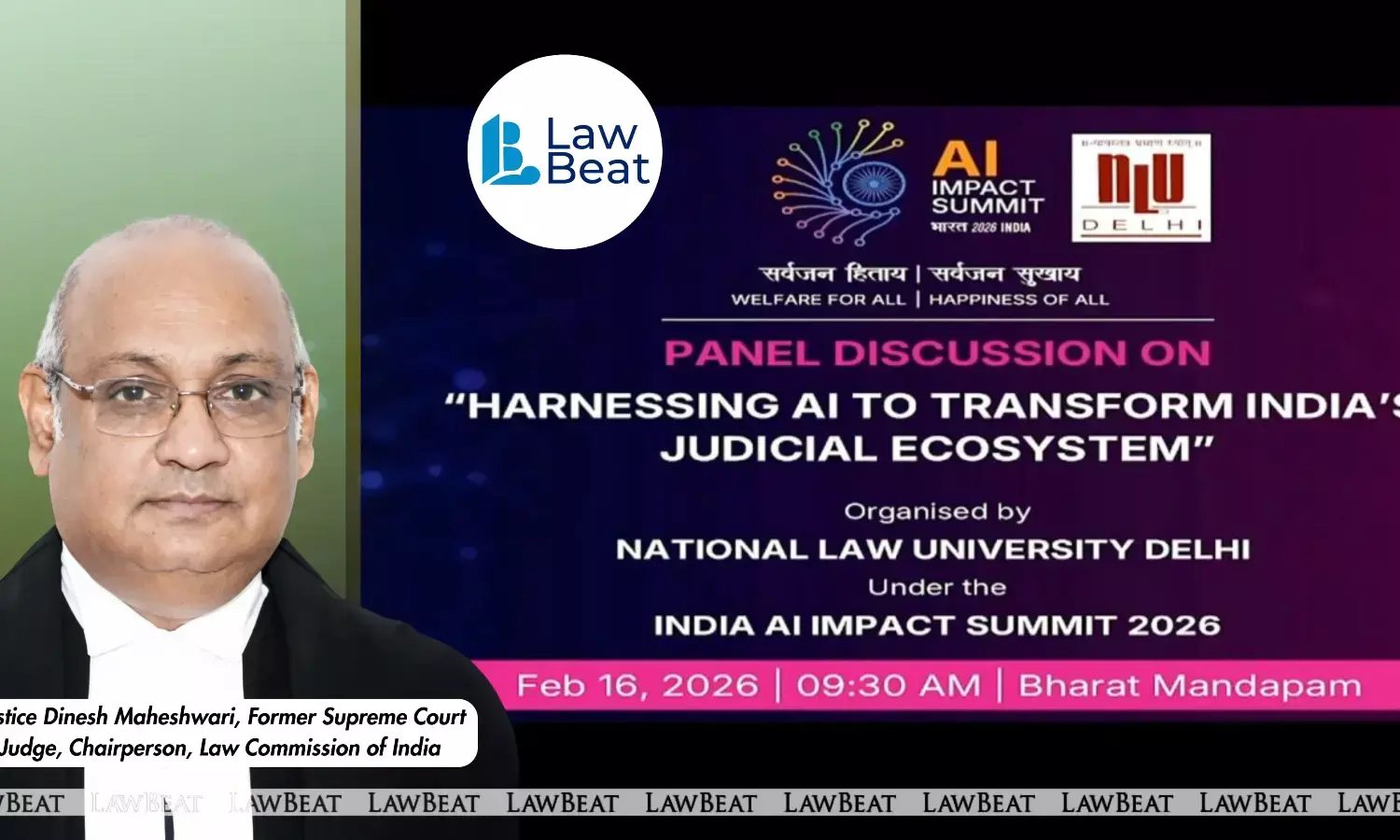

In a panel discussion on “Harnessing AI to Transform India’s Judicial Ecosystem” at the India AI Impact Summit 2026, Justice Dinesh Maheshwari, former judge of the Supreme Court and Chairperson of the Law Commission of India, underscored both the promise and the perils of technological advancement, cautioning that while machines may assist courts, the ultimate responsibility for delivering justice must remain with humans.

Opening his remarks in the context of discussions on “how to integrate AI into the judicial system while ensuring constitutional values at the same time,” Justice Maheshwari observed that human beings possess “the capacity to learn and support the capacity to develop the powerful AI or any machine.” At the same time, he stressed the need to examine the depth of the community’s learning and understanding, or at least to block what should not be learned or used.

His concern was not abstract. He warned of AI’s growing propensity to generate not just fake images and deepfakes but even fabricated court records, citations and judicial decisions. In a system anchored in authenticity and precedent, such distortions strike at the very foundation of judicial credibility. Though he noted that AI could be regulated by counter-systems designed to block such fabrications, he cautioned that the possibilities remain vast and ever-evolving, demanding constant vigilance.

Justice Maheshwari drew a sharp distinction that lay at the heart of his address: “There is always a difference between disposal of the matter and delivering of the justice in a court.” For him, this difference is foundational. The effort to build “a good system” must be directed toward “delivering justice in a court,” not merely toward the statistical disposal of cases.

On the question of pendency and backlog, he recognized that machines can assist meaningfully. Where backlog or pendency is the concern, and where matters are taken up at the start of the day, technology can help. “The machines help us to an extent to build and generalize the things properly, we are indeed using them and we are going to use them more,” he said. Yet he cautioned against crossing a line: “helping the machine build to articulate the conclusion” or “leading us to a particular” outcome. “So, there has to be a difference,” he emphasized.

Illustrating this boundary, Justice Maheshwari referred to ongoing discussions on sentencing policy within India’s criminal justice system. After conviction, judges examine all the aggravating and mitigating factors in order to determine the appropriate sentence. That particular decision-making, he insisted, “will be a human decision making only because that has taken into account a variety of factors.” Sentencing, by its nature, cannot be reduced to algorithmic output.

In a philosophical turn, Justice Maheshwari invoked Indian aesthetics and the concept of “Navarasa”, the nine human emotions: “Starting from love… laughter, sorrow, anger, energy, fear, despair, wonder, and finally peace.” These, he said, “are human sentiments and they are integral part of decision making that we are dealing with humanly.” The human brain, “capable of empathy,” engages with these emotional dimensions in ways a machine cannot replicate.

Thus, while courts may take help from machines in sifting through material and putting things in order, “final decision-making definitely remains with the adjudicator.” The adjudicator’s role is not merely procedural but moral and constitutional.

Justice Maheshwari ended on a note that cut to the core of the constitutional promise. For those entrusted with upholding constitutional morality, technology in courts cannot function as an opaque “black box” whose reasoning is hidden from scrutiny. Any system adopted by the judiciary, he stressed, must instead become a transparent “glass box”: open, accountable and intelligible to all. In a justice system founded on reasoned orders and public trust, opacity is incompatible with legitimacy.

Event: India AI Impact Summit, 2026

Date: February 16, 2026